Meta’s involvement in the Israeli misinformation battle has recently come under fire, particularly due to the troubling use of algorithms in the platform’s operations.

One alarming example is the inadvertent insertion of the word “terrorist” into the profile descriptions of several Palestinian Instagram users. This unsettling development has raised concerns about the role of technology in shaping the narrative and potentially perpetuating bias.

The incident, reportedly caused by a bug in Meta’s auto-translation feature, highlights the significant impact algorithms can have on how content is curated and presented on social media. In the context of the Israeli misinformation war, labeling individuals as “terrorists” can have far-reaching repercussions, from tarnishing reputations and influencing public opinion to costing people their jobs and even inciting violence.

Meta has apologized for the incident and says it has resolved the bug, Guardian Australia reported. However, the episode underscores the broader challenges of content moderation in an era where algorithms play a pivotal role in determining what information is disseminated.

Dive deeper

The distressing problem, initially brought to attention by 404 Media, impacted users whose profiles included the word “Palestinian” in English, the Palestinian flag emoji, and the Arabic phrase “alhamdulillah”. When these elements were auto-translated into English, the resulting phrase read: “Praise be to God, Palestinian terrorists are fighting for their freedom.”

A TikTok user named YtKingKhan raised awareness of this issue earlier in the week, highlighting that various combinations still resulted in the word “terrorist” when translated.

“How did this get pushed to production?” one person said.

“Please tell me this is a joke bc I cannot comprehend it I’m out of words,” another added.

“We fixed a problem that briefly caused inappropriate Arabic translations in some of our products. We sincerely apologize that this happened,” a Meta spokesperson said, as quoted by Guardian Australia.

‘A real concern’

Fahad Ali, a Palestinian residing in Sydney and the secretary of Electronic Frontiers Australia, expressed concerns about the lack of transparency from Meta regarding how this incident was allowed to happen.

“There is a real concern about these digital biases creeping in and we need to know where that is stemming from,” he said, as quoted by Guardian Australia.

“Is it stemming from the level of automation? Is it stemming from an issue with a training set? Is it stemming from the human factor in these tools? There is no clarity on that.”

“And that’s what we should be seeking to address and that’s what I would hope Meta will be making more clear,” he added.

A former Facebook employee with access to conversations among current Meta employees said, as quoted by Guardian Australia, that the problem had significantly distressed many people, both within the company and among the wider public.

‘Palestinian voices are the ones getting caught up in this’

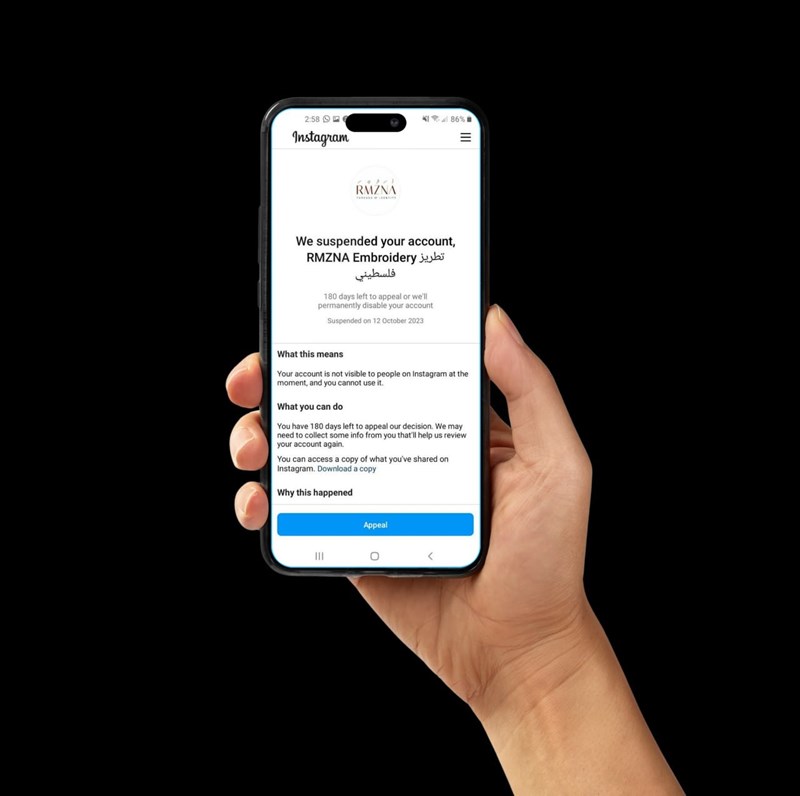

Since the beginning of the brutal Israeli aggression on Gaza, Meta has suppressed posts expressing support for Palestine on its platforms. The evidence suggests that Meta has been shadow-banning accounts that share pro-Palestine content, effectively reducing the visibility of their posts in other users’ feeds.

On this note, Dr. Hasan Youness, a university professor and researcher in international business management, said that “Meta’s shadow ban on content related to Palestine and Gaza underscores the broader challenges of content moderation in today’s digital landscape. It shows that the owners of such platforms are major participants in wars and have an impact on shaping the public opinion to fit their biased rhetoric and unfair arguments.”

“Suspending accounts deemed to be ‘affiliated with Hamas’ in an attempt to curb the spread of what they selectively deem ‘violent and hateful’ online content is the justification to show one side of the story,” Youness added.

Youness further provided hacks for users facing suspension or shadow banning:

Meanwhile, Meta claimed that they had implemented new measures to “address the spike in harmful and potentially harmful content spreading on our platforms.”

Meta further asserted that any suggestion that the company is suppressing anyone’s voice is unfounded.

It is worth noting that many users have reported that their accounts were suspended or shadow-banned for posting pro-Palestine content or for reporting the Israeli atrocities during its ongoing genocide.
